- OpenVINO™ Toolkit Overview:


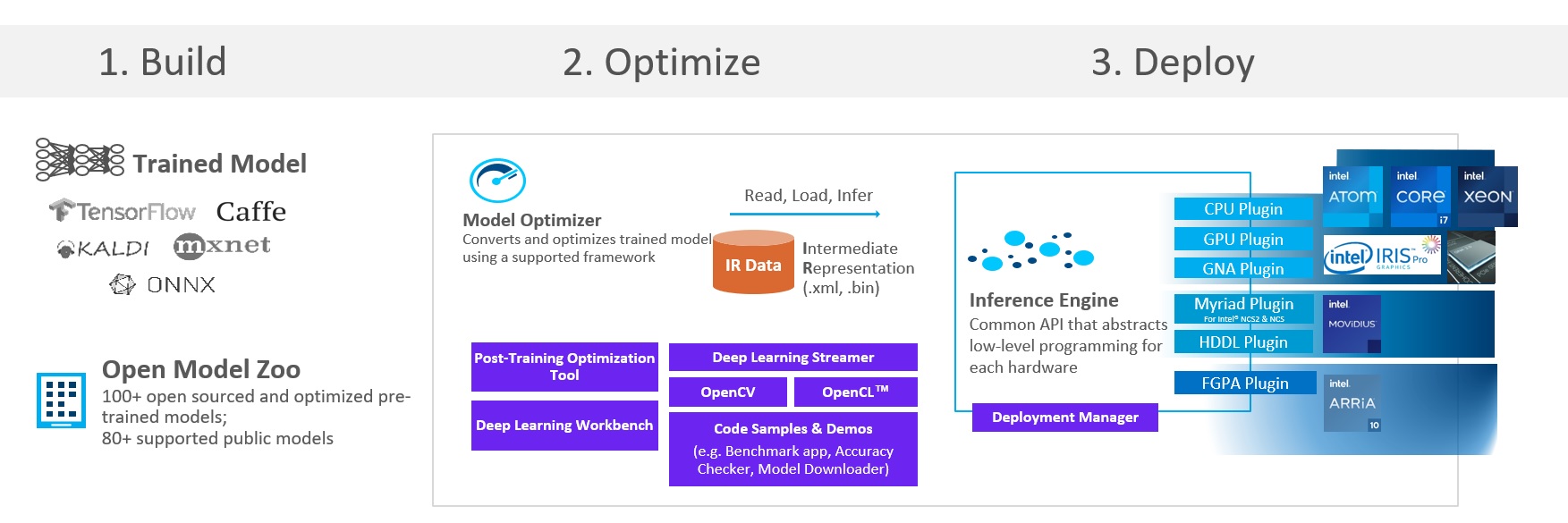

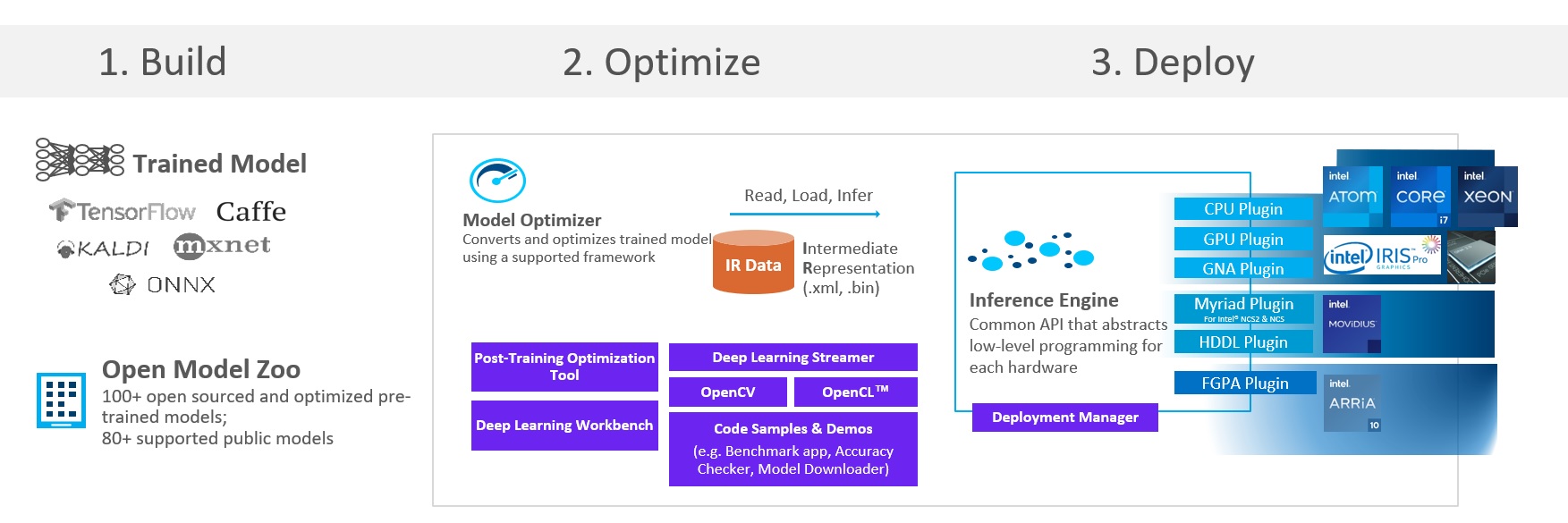


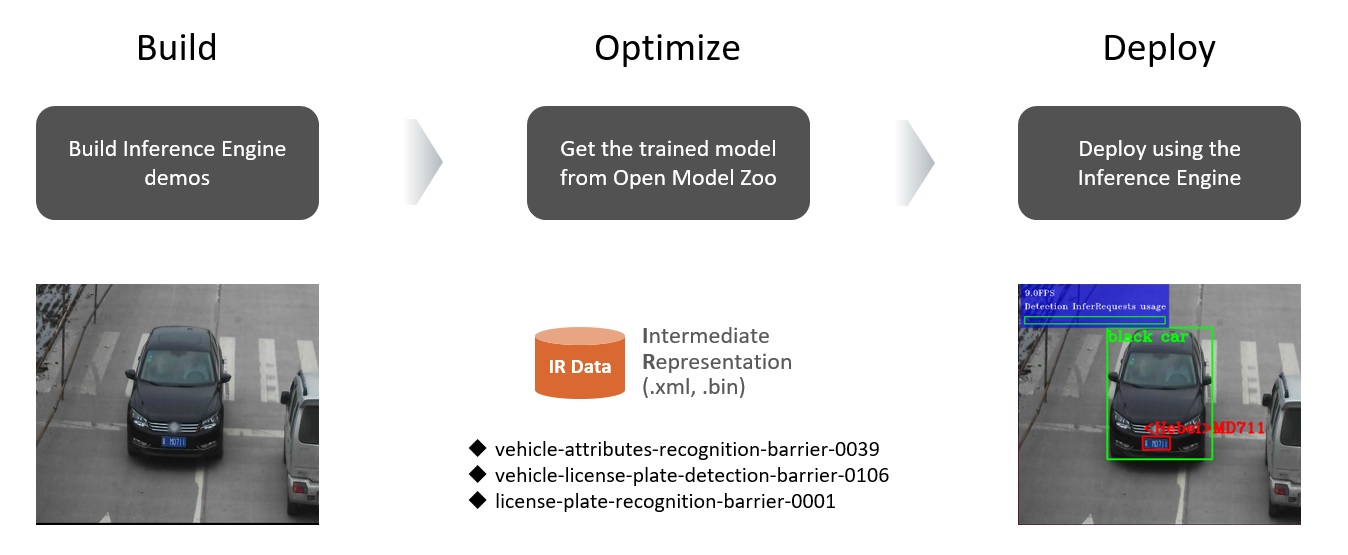

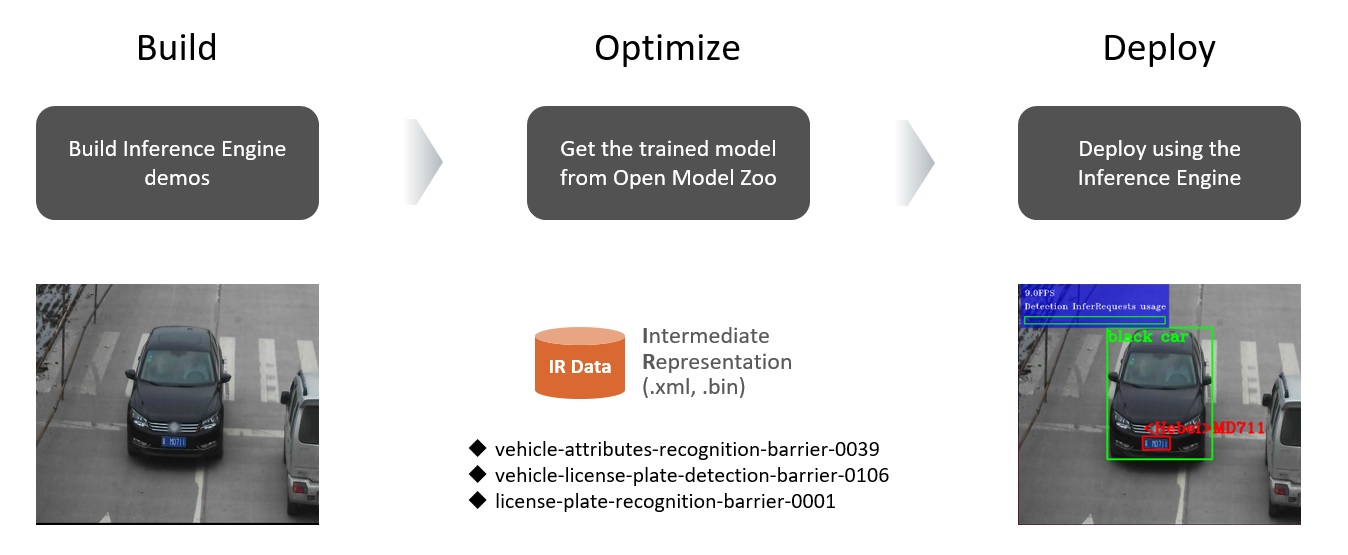


OpenVINO is a comprehensive toolkit for quickly developing applications and solutions that solve a variety of tasks, including emulation of human vision, automatic speech recognition, natural language processing, recommendation systems, and many others. The key features are:

- Enables CNN-based deep learning inference on the edge

- Supports heterogeneous execution across an Intel® CPU, Intel® Integrated Graphics, Intel® Neural Compute Stick 2 and Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

- Speeds time-to-market via an easy-to-use library of computer vision functions and pre-optimized kernels

- Includes optimized calls for computer vision standards, including OpenCV* and OpenCL™

- Using Intel® Distribution of OpenVINO™ toolkit to Quickly Start Your AI Implementation in 3 Steps:

Step 1. Build:

Train a model, or find one that has already been trained. You can use your framework of choice to prepare and train a deep learning model, or simply download a pretrained model from the Open Model Zoo. The Open Model Zoo includes deep learning solutions for a variety of vision problems, such as:

- object recognition

- face recognition

- pose estimation

- text detection

- action recognition

Step 2. Optimize:

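Next, use Model Optimizer, a cross-platform command-line tool, to convert the trained neural network from its source framework into an open-source, nGraph-compatible Intermediate Representation (IR) for use in inference. The IR consists of an .xml file describing the network topology and a .bin file holding the weights. Model Optimizer accepts models trained in frameworks such as: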

- TensorFlow*

- PyTorch* (via export to ONNX*)

- Caffe*

- MXNet*

- ONNX*
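Because the IR topology is plain XML, you can inspect what Model Optimizer produced with nothing but the standard library. The sketch below assumes the IR v10 layout (a `<net>` root containing `<layers>` of `<layer id name type>` elements and an `<edges>` list); `summarize_ir` is a hypothetical helper, and `TOY_IR` is a hand-written fragment for illustration, not real Model Optimizer output.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def summarize_ir(xml_text):
    """Summarize an IR topology (.xml) string: network name, IR version, layer types, edge count."""
    root = ET.fromstring(xml_text)              # root element is <net>
    layers = root.findall("./layers/layer")     # every <layer> under <layers>
    edges = root.findall("./edges/edge")        # connections between layer ports
    return {
        "name": root.get("name"),
        "version": root.get("version"),
        "layer_types": Counter(layer.get("type") for layer in layers),
        "num_edges": len(edges),
    }


# Toy fragment in the IR v10 style (hypothetical; NOT an Open Model Zoo model).
TOY_IR = """<?xml version="1.0"?>
<net name="toy" version="10">
  <layers>
    <layer id="0" name="data" type="Parameter" version="opset1"/>
    <layer id="1" name="relu" type="ReLU" version="opset1"/>
    <layer id="2" name="out" type="Result" version="opset1"/>
  </layers>
  <edges>
    <edge from-layer="0" from-port="0" to-layer="1" to-port="0"/>
    <edge from-layer="1" from-port="1" to-layer="2" to-port="0"/>
  </edges>
</net>"""

if __name__ == "__main__":
    print(summarize_ir(TOY_IR))
```

For a real model, pass the file contents, e.g. `summarize_ir(Path("model.xml").read_text())`; the matching .bin file is not needed just to read the topology.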

Step 3. Deploy:

Use the Inference Engine to load and compile the optimized model, run inference on input data, and output the results. The Inference Engine can execute synchronously or asynchronously, and its plugin architecture manages the appropriate compilation for execution on multiple Intel® devices, including:

- CPU

- GPU

- VPU

- FPGA
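The load, compile, and infer sequence described above maps onto a few calls in the Inference Engine Python API. This is a minimal sketch that assumes the `openvino.inference_engine` API of the 2020.x releases (`IECore.read_network`, `IECore.load_network`, `ExecutableNetwork.infer` and `start_async`). Names changed in later releases, so check the API reference for your version. `ir_pair` and `run_inference` are hypothetical helper names, and only `ir_pair` runs without OpenVINO installed.

```python
from pathlib import Path


def ir_pair(xml_path):
    """Return (model .xml, weights .bin) paths; Model Optimizer writes them side by side."""
    xml = Path(xml_path)
    return str(xml), str(xml.with_suffix(".bin"))


def run_inference(xml_path, input_array, device="CPU", use_async=False):
    """Load an IR on `device` and run one inference (needs an OpenVINO 2020.x install).

    `input_array` is assumed to be a numpy array already shaped like the network
    input (typically NCHW); preprocessing is out of scope for this sketch.
    """
    # Imported lazily so ir_pair() above works without OpenVINO installed.
    from openvino.inference_engine import IECore

    ie = IECore()
    model, weights = ir_pair(xml_path)
    net = ie.read_network(model=model, weights=weights)
    input_name = next(iter(net.inputs))  # assumes a single-input network
    # load_network hands the model to the device plugin (CPU, GPU, MYRIAD, ...) to compile.
    exec_net = ie.load_network(network=net, device_name=device, num_requests=1)

    if not use_async:
        # Synchronous: infer() blocks and returns {output_name: numpy array}.
        return exec_net.infer(inputs={input_name: input_array})

    # Asynchronous: start the request, do other work here, then wait for completion.
    exec_net.start_async(request_id=0, inputs={input_name: input_array})
    request = exec_net.requests[0]
    request.wait(-1)  # -1: block until the result is ready
    return dict(request.outputs)
```

The asynchronous path only pays off when the caller does useful work (for example, decoding the next frame) between `start_async` and `wait`; for a single image, the synchronous call is simpler.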

- Hands-on: Experience the OpenVINO™ pipeline with a car detection and recognition scenario

- Software Requirements:

| Component | Requirement |
|---|---|
| Target OS | Ubuntu* 18.04 LTS |
| OpenVINO | 2020.3 LTS |

- Prerequisites:

1. Get the Intel® Distribution of OpenVINO™ Toolkit

2. Install Intel® Distribution of OpenVINO™ toolkit for Linux*

3. Build the Demo Applications

# Build (for the release configuration, the demo application binaries are in //intel64/Release/)

$ mkdir openvino_demo_walkthrough

$ cd openvino_demo_walkthrough/

$ cp /opt/intel/openvino/deployment_tools/demo/car_1.bmp .

$ cp /home/intel/omz_demos_build/intel64/Release/security_barrier_camera_demo .

# Optimize (in this case, we directly download ready-made IR files from the Open Model Zoo instead of running Model Optimizer)

$ python3 /opt/intel/openvino/deployment_tools/tools/model_downloader/downloader.py --name license-plate-recognition-barrier-0001 --precisions FP32 -o .

$ python3 /opt/intel/openvino/deployment_tools/tools/model_downloader/downloader.py --name vehicle-attributes-recognition-barrier-0039 --precisions FP32 -o .

$ python3 /opt/intel/openvino/deployment_tools/tools/model_downloader/downloader.py --name vehicle-license-plate-detection-barrier-0106 --precisions FP32 -o .
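The three download commands above differ only in the model name, so they can be generated in a loop. This Python sketch reuses the downloader path and flags from the walkthrough. `downloader_commands` and `download_all` are hypothetical helpers, and by default the script only prints the commands (a dry run) unless it is invoked with `--run`.

```python
import subprocess
import sys

# Downloader location used in this walkthrough (OpenVINO 2020.3 default install path).
DOWNLOADER = "/opt/intel/openvino/deployment_tools/tools/model_downloader/downloader.py"
MODELS = [
    "license-plate-recognition-barrier-0001",
    "vehicle-attributes-recognition-barrier-0039",
    "vehicle-license-plate-detection-barrier-0106",
]


def downloader_commands(models, precision="FP32", output_dir="."):
    """Build one downloader.py command (as an argv list) per model name."""
    return [
        [sys.executable, DOWNLOADER, "--name", name,
         "--precisions", precision, "-o", output_dir]
        for name in models
    ]


def download_all(models=MODELS, precision="FP32", output_dir=".", dry_run=True):
    """Print each command; execute it too unless dry_run is set."""
    for cmd in downloader_commands(models, precision, output_dir):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failed download


if __name__ == "__main__":
    download_all(dry_run="--run" not in sys.argv)
```

Passing a list of arguments to `subprocess.run` (rather than a shell string) avoids quoting problems if a path ever contains spaces.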

# Deploy (Feed the IR to Inference Engine)

$ ./security_barrier_camera_demo -i car_1.bmp -m intel/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.xml -m_va intel/vehicle-attributes-recognition-barrier-0039/FP32/vehicle-attributes-recognition-barrier-0039.xml -m_lpr intel/license-plate-recognition-barrier-0001/FP32/license-plate-recognition-barrier-0001.xml -d CPU
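The demo command follows a fixed pattern: as the paths above show, the downloader places each IR at `intel/{name}/{precision}/{name}.xml` relative to its output directory. A small sketch with the hypothetical helpers `omz_ir_path` and `demo_command` can assemble the same argument list for any device or precision, which avoids typos in the long paths.

```python
from pathlib import PurePosixPath


def omz_ir_path(model_name, precision="FP32", root="intel"):
    """IR .xml path in the layout the downloader produced above: root/name/precision/name.xml."""
    return str(PurePosixPath(root, model_name, precision, model_name + ".xml"))


def demo_command(image, device="CPU", precision="FP32"):
    """argv for security_barrier_camera_demo with the three models used in this walkthrough."""
    return [
        "./security_barrier_camera_demo",
        "-i", image,
        "-m", omz_ir_path("vehicle-license-plate-detection-barrier-0106", precision),
        "-m_va", omz_ir_path("vehicle-attributes-recognition-barrier-0039", precision),
        "-m_lpr", omz_ir_path("license-plate-recognition-barrier-0001", precision),
        "-d", device,
    ]


if __name__ == "__main__":
    print(" ".join(demo_command("car_1.bmp")))
```

Running `subprocess.run(demo_command("car_1.bmp"), check=True)` from the `openvino_demo_walkthrough` directory should be equivalent to the command above. `precision="FP16"` only works if those IRs were downloaded with `--precisions FP16`.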

# The console should show output similar to the following, including the inference results

[ INFO ] InferenceEngine: 0x7efc31a7b040

[ INFO ] InferenceEngine: 0x7efc31a7b040

[ INFO ] Files were added: 1

[ INFO ] car_1.bmp

[ INFO ] Loading device CPU

CPU

MKLDNNPlugin version ......... 2.1

Build ........... 2020.3.0-3467-15f2c61a-releases/2020/3

[ INFO ] Loading detection model to the CPU plugin

[ INFO ] Loading Vehicle Attribs model to the CPU plugin

[ INFO ] Loading Licence Plate Recognition (LPR) model to the CPU plugin

[ INFO ] Number of InferRequests: 1 (detection), 3 (classification), 3 (recognition)

[ INFO ] 1 streams for CPU

[ INFO ] Display resolution: 1920x1080

[ INFO ] Number of allocated frames: 3

[ INFO ] Resizable input with support of ROI crop and auto resize is disabled

0.4FPS for (1 / 1) frames

Detection InferRequests usage: 0.0%
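When comparing runs, for example this CPU run against a MYRIAD run, it helps to extract the key numbers from the console output. The sketch assumes the lines are formatted exactly as printed above. `parse_demo_log` is a hypothetical helper, and the regular expressions may need adjusting for other OpenVINO releases.

```python
import re

# Patterns for two lines of the demo output shown above.
FPS_RE = re.compile(r"([\d.]+)\s*FPS for \((\d+) / (\d+)\) frames")
REQ_RE = re.compile(
    r"Number of InferRequests: (\d+) \(detection\), "
    r"(\d+) \(classification\), (\d+) \(recognition\)"
)


def parse_demo_log(text):
    """Extract throughput and infer-request counts from captured demo output."""
    result = {}
    fps = FPS_RE.search(text)
    if fps:
        result["fps"] = float(fps.group(1))
        result["frames"] = (int(fps.group(2)), int(fps.group(3)))
    reqs = REQ_RE.search(text)
    if reqs:
        # (detection, classification, recognition) request counts
        result["infer_requests"] = tuple(int(g) for g in reqs.groups())
    return result


if __name__ == "__main__":
    sample = (
        "[ INFO ] Number of InferRequests: 1 (detection), 3 (classification), 3 (recognition)\n"
        "0.4FPS for (1 / 1) frames\n"
    )
    print(parse_demo_log(sample))
```

Lines that are missing simply leave their keys out of the result, so a partial log does not raise an error.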

# Additional: Perform inference on Intel® Movidius™ Vision Processing Units (VPUs)

Intel® Movidius™ VPUs enable demanding computer vision and edge AI workloads with efficiency. By coupling highly parallel programmable compute with workload-specific hardware acceleration in a unique architecture that minimizes data movement, Movidius VPUs achieve a balance of power efficiency and compute performance.

# To specify the target device for inference, pass -d followed by the device name; run the demo with -h to list the options and available devices

$ ./security_barrier_camera_demo -h

[ INFO ] InferenceEngine: 0x7ff072e9e040

interactive_vehicle_detection [OPTION]

Options:

-h Print a usage message.

-i "" "" Required for video or image files input. Path to video or image files.

-m "" Required. Path to the Vehicle and License Plate Detection model .xml file.

-m_va "" Optional. Path to the Vehicle Attributes model .xml file.

-m_lpr "" Optional. Path to the License Plate Recognition model .xml file.

-l "" Required for CPU custom layers. Absolute path to a shared library with the kernels implementation.

Or

-c "" Required for GPU custom kernels. Absolute path to an .xml file with the kernels description.

-d "" Optional. Specify the target device for Vehicle Detection (the list of available devices is shown below). Default value is CPU. Use "-d HETERO:" format to specify HETERO plugin. The application looks for a suitable plugin for the specified device.

-d_va "" Optional. Specify the target device for Vehicle Attributes (the list of available devices is shown below). Default value is CPU. Use "-d HETERO:" format to specify HETERO plugin. The application looks for a suitable plugin for the specified device.

-d_lpr "" Optional. Specify the target device for License Plate Recognition (the list of available devices is shown below). Default value is CPU. Use "-d HETERO:" format to specify HETERO plugin. The application looks for a suitable plugin for the specified device.

-pc Optional. Enables per-layer performance statistics.

-r Optional. Output inference results as raw values.

-t Optional. Probability threshold for vehicle and license plate detections.

-no_show Optional. Do not show processed video.

-auto_resize Optional. Enable resizable input with support of ROI crop and auto resize.

-nireq Optional. Number of infer requests. 0 sets the number of infer requests equal to the number of inputs.

-nc Required for web camera input. Maximum number of processed camera inputs (web cameras).

-fpga_device_ids Optional. Specify FPGA device IDs (0,1,n).

-loop_video Optional. Enable playing video on a loop.

-n_iqs Optional. Number of allocated frames. It is a multiplier of the number of inputs.

-ni Optional. Specify the number of channels generated from provided inputs (with -i and -nc keys). For example, if only one camera is provided, but -ni is set to 2, the demo will process frames as if they are captured from two cameras. 0 sets the number of input channels equal to the number of provided inputs.

-fps Optional. Set the playback speed not faster than the specified FPS. 0 removes the upper bound.

-n_wt Optional. Set the number of threads including the main thread a Worker class will use.

-display_resolution Optional. Specify the maximum output window resolution.

-tag Required for HDDL plugin only. If not set, the performance on Intel(R) Movidius(TM) X VPUs will not be optimal. Running each network on a set of Intel(R) Movidius(TM) X VPUs with a specific tag. You must specify the number of VPUs for each network in the hddl_service.config file. Refer to the corresponding README file for more information.

-nstreams "" Optional. Number of streams to use for inference on the CPU or/and GPU in throughput mode (for HETERO and MULTI device cases use format :,: or just )

-nthreads "" Optional. Number of threads to use for inference on the CPU (including HETERO and MULTI cases).

-u Optional. List of monitors to show initially.

[E:] [BSL] found 0 ioexpander device

Available target devices: CPU GNA GPU MYRIAD HDDL
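According to the help text, `-d`, `-d_va` and `-d_lpr` each select the device for one of the three models, and each defaults to CPU. A command that passes only `-d MYRIAD` therefore offloads only the detection model. The sketch below reads the device list from captured `-h` output and assembles per-model flags, rejecting devices that are not listed. `parse_available_devices` and `device_flags` are hypothetical helpers, and the simple membership check does not handle `HETERO:` or `MULTI:` device strings.

```python
def parse_available_devices(help_text):
    """Return device names from the 'Available target devices:' line of `-h` output."""
    for line in help_text.splitlines():
        line = line.strip()
        if line.startswith("Available target devices:"):
            return line.split(":", 1)[1].split()
    return []


def device_flags(available, detection="CPU", attributes=None, lpr=None):
    """Build -d/-d_va/-d_lpr flags, checking each device name against `available`.

    A model whose device is None gets no flag, so the demo keeps its documented
    default (CPU) for it. HETERO:/MULTI: strings are not handled by this check.
    """
    flags = []
    for flag, device in (("-d", detection), ("-d_va", attributes), ("-d_lpr", lpr)):
        if device is None:
            continue
        if device not in available:
            raise ValueError(f"device {device!r} not in {available}")
        flags += [flag, device]
    return flags


if __name__ == "__main__":
    devices = parse_available_devices("Available target devices:  CPU  GNA  GPU  MYRIAD  HDDL")
    # Offload all three models to the Neural Compute Stick 2:
    print(device_flags(devices, "MYRIAD", "MYRIAD", "MYRIAD"))
```

`device_flags(devices, "MYRIAD")` yields just `["-d", "MYRIAD"]`, which matches the command below. Passing all three arguments moves the attribute and license-plate models to the VPU as well.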

# Run inference on a VPU (Intel® Neural Compute Stick 2)

$ ./security_barrier_camera_demo -i car_1.bmp -m intel/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.xml -m_va intel/vehicle-attributes-recognition-barrier-0039/FP32/vehicle-attributes-recognition-barrier-0039.xml -m_lpr intel/license-plate-recognition-barrier-0001/FP32/license-plate-recognition-barrier-0001.xml -d MYRIAD